As you start your Internet of Things (IoT) journey, keep this question in mind: What use is IoT data if you begin analyzing it three days after a fire has already broken out in some expensive machinery?

Is your system powerful enough to churn through all the data captured from sensors around the world, or even in just one factory? Will you have enough time in the day to run queries for hours, scanning huge volumes of data flooding into your big data system, with no real idea of which data actually matters? As in most cases, getting the right answer requires asking the right questions.

A major trend emerging at the moment is industrialized predictive algorithms, which can churn through your data and detect anomalies and outliers. Think of it this way: what if you asked the right questions up front, and eliminated the need for all of your employees to rush into the system trying to analyze everything?
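To make "detecting outliers" concrete, here is a minimal sketch in plain Python. It is not any vendor's predictive algorithm; it assumes a simple list of temperature readings and uses a robust z-score based on the median absolute deviation (MAD), so that a single extreme reading cannot hide itself by inflating the mean and standard deviation. The function name `find_outliers` and the 3.5 cut-off are illustrative choices.

```python
from statistics import median


def find_outliers(readings, threshold=3.5):
    """Return (index, value) pairs whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * |x - median| / MAD, where MAD is the median
    absolute deviation from the median. Using medians keeps one extreme
    reading from distorting the baseline it is compared against.
    """
    if not readings:
        return []
    med = median(readings)
    mad = median(abs(x - med) for x in readings)
    if mad == 0:
        # Most readings are identical, so there is no spread to scale by:
        # treat anything that differs from the median as an outlier.
        return [(i, x) for i, x in enumerate(readings) if x != med]
    return [(i, x) for i, x in enumerate(readings)
            if 0.6745 * abs(x - med) / mad > threshold]


temps = [70.1, 70.4, 69.8, 70.0, 70.3, 69.9, 70.2, 95.0]
print(find_outliers(temps))  # [(7, 95.0)]
```

Only the anomalous reading is surfaced, which is the point: people look at one flagged value instead of scanning everything.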

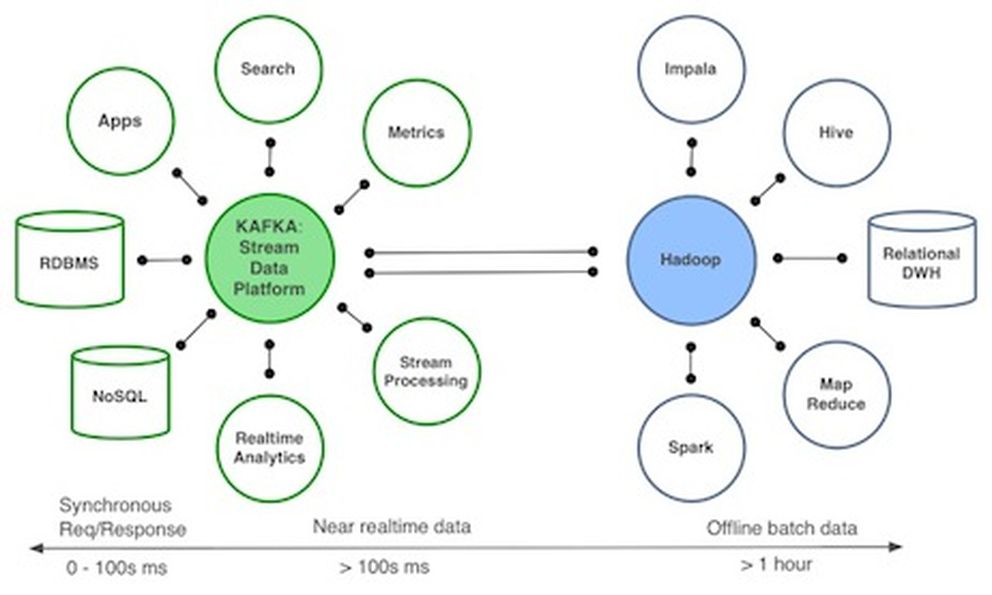

Image: Real-time Internet of Things Example

Real-Time Internet of Things Explained

How could you process these masses of data in your company? The image above shows a very simple example by MemSQL of how to streamline the integration between Kafka and Spark, using a Twitter feed as the data source. Everything is integrated into the user interface, and installing and configuring the software in Amazon's cloud takes only a few shell commands. It also offers transformation and load capabilities, i.e. the full ETL (extract, transform, load) pipeline.

Twitter has also open sourced an event stream processing engine, Storm. Its successor, Heron, was announced in June 2015 at SIGMOD 2015. So LinkedIn, where Kafka originated, is not the only company that has worked on these technologies.

Going back to my example of a fire suddenly breaking out in machinery: the temperature sensors could have picked up on the unusually high temperature and acted on it, like a failsafe. How? By filtering out all the normal temperature readings and passing through only measurements above a certain threshold. Once such a value is captured in real time, the system launches a process that shuts down the machinery and alerts an engineer via SMS to inspect it.

The process also places a maintenance order in the ERP system, which the engineer can review before going to see what has happened and can close once the machine is fixed. All the sensor data captured at the time of the incident could be made available to the engineer.
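The failsafe flow described above (filter, then react once) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real integration: `Reading`, `Failsafe`, and `run` are hypothetical names, and the shutdown, SMS, and ERP steps are stand-ins that only append to a log where a real system would call a machine controller, an SMS gateway, and the ERP system.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Reading:
    machine_id: str
    celsius: float


def above_threshold(stream: Iterable[Reading], limit: float) -> Iterator[Reading]:
    """Event filtering: drop normal readings, pass through only hot ones."""
    return (r for r in stream if r.celsius > limit)


@dataclass
class Failsafe:
    """Hypothetical responder: each action is only recorded in `log`."""
    log: list = field(default_factory=list)
    tripped: set = field(default_factory=set)

    def handle(self, reading: Reading) -> None:
        if reading.machine_id in self.tripped:
            return  # already handled: avoid duplicate SMS and ERP orders
        self.tripped.add(reading.machine_id)
        self.log.append(f"SHUTDOWN {reading.machine_id}")
        self.log.append(f"SMS engineer: {reading.machine_id} at {reading.celsius} C")
        # The triggering sensor value travels with the order for the engineer.
        self.log.append(f"ERP maintenance order for {reading.machine_id} "
                        f"({reading.celsius} C)")


def run(stream: Iterable[Reading], limit: float) -> Failsafe:
    failsafe = Failsafe()
    for hot in above_threshold(stream, limit):
        failsafe.handle(hot)
    return failsafe


readings = [Reading("press-1", 64.0), Reading("press-2", 61.5),
            Reading("press-1", 118.0), Reading("press-1", 121.0)]
result = run(readings, limit=90.0)
for line in result.log:
    print(line)
```

The two normal readings never reach the responder, and the second hot reading from `press-1` is ignored because that machine has already been shut down.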

The alternative to loading masses of batch data, most of which can be thrown away, is complex event processing (CEP). CEP relies on a number of techniques:

- Event pattern detection

- Event abstraction

- Event filtering

- Event aggregation and transformation

- Modelling event hierarchies

- Detecting relationships between events
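Several of these techniques can be combined even in a toy example. The sketch below is hand-rolled Python, not the API of any CEP product (dedicated engines usually express such rules in their own query languages). It aggregates a sliding window of readings, detects a pattern (strictly rising temperatures whose average exceeds a limit), and abstracts the match into a higher-level `OverheatingTrend` event. The window size, limit, and all names are assumptions chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Reading:
    ts: int           # seconds since start (hypothetical clock)
    celsius: float


@dataclass(frozen=True)
class OverheatingTrend:
    """Abstract (complex) event derived from several raw readings."""
    start_ts: int
    end_ts: int
    avg_celsius: float


def detect_trends(stream: Iterable[Reading], window: int = 3,
                  avg_limit: float = 80.0) -> Iterator[OverheatingTrend]:
    """Emit an OverheatingTrend when the last `window` readings are strictly
    rising AND their average exceeds `avg_limit`."""
    recent: deque = deque(maxlen=window)  # sliding window of raw events
    for r in stream:
        recent.append(r)
        if len(recent) < window:
            continue
        temps = [x.celsius for x in recent]
        rising = all(a < b for a, b in zip(temps, temps[1:]))  # pattern detection
        avg = sum(temps) / window                               # aggregation
        if rising and avg > avg_limit:
            # Event abstraction: one derived event replaces several raw ones.
            yield OverheatingTrend(recent[0].ts, recent[-1].ts, round(avg, 2))
            recent.clear()  # start fresh so one trend is reported only once


raw = [Reading(t, c) for t, c in enumerate([70, 72, 71, 78, 85, 93, 95, 90])]
trends = list(detect_trends(raw))
print(trends)
```

The window ending at `ts=4` is rising but averages only 78 degrees, so it is not reported; the window from `ts=3` to `ts=5` averages about 85.33 degrees and yields a single `OverheatingTrend`.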

Many technologies on the market deal with these masses of IoT data. One more business-oriented approach is SAP's well-established CEP solution, which came to SAP through its acquisition of Sybase.

What's next? Once you have set up complex event processing, you may decide you want the best of both worlds: let all the masses of data flow into your Hadoop instance while doing complex event stream processing at the same time. Here is a nice video in which Capgemini partners talk about how they used the above technologies to create the Insights-Driven Operations solution.

Denis Sproten is a senior solution architect at Capgemini.